Home network crawler - cataloging every file on the home LAN with C# and MongoDB

Map-Reduce in action: The glaciers in Greenland 'map' the canyon walls into streams of rocks called lateral moraine. As the glaciers merge these rocks are 'reduced' into streams in the middle called 'medial' moraine. (A photo I took over Greenland this summer.)

With the addition of two more 3TB drives to the home network it's becoming impossible to track files and to remember where each one is and whether it's a backup of some other disk or not. There are 8 computers on the home network and over 10TB of storage distributed between them. Much of the storage is concentrated on a single machine running Windows Server 2008. It's a low-powered Atom server connected to a Sans Digital 1U rackmount disk array.

I'm not a huge fan of RAID arrays - they mostly mean there's another component to go wrong (the controller card) and when they do go wrong you can lose all your data just as easily as if it were all on one drive. I prefer a multiple copy strategy, an "Amazon S3 for the home" if you like. The downside of this is that there are multiple copies of each file across the home network, and as I have several generations of hard drives the mapping from primary to secondary to tertiary is complex and hard to manage! It's also really hard to find a single file when there are so many places to look, and it's nigh on impossible to be sure that I have the necessary three copies of every important file in the right places at all times.

So this weekend I embarked on a small project to catalog every file, directory and storage volume on the entire home network including drives that are only sometimes connected. The software has been running all weekend and is close to cataloging everything. It's found 5 million files so far representing over 6TB of data!

The architecture I chose for this software was an agent that runs on each PC to catalog all of the attached volumes. Each agent uploads all the directories and files that it finds to a MongoDB database running on the same Atom server as the main storage array. The poor little Atom server's 4GB of RAM has been in constant use but the server has remained responsive, in part because it boots from an SSD.

Each volume, directory and file is represented by a document in MongoDB in a single collection. The agent calculates an MD5 hash for each file and extracts metadata from MP3, WMA and JPG files. It also stores all of the key file dates (created, updated, accessed) and references to parent directories, volume identifiers and the currently connected PC. It does not assume that a volume is always connected to the same computer - you can unplug an external drive from one and put it somewhere else and it will all work just fine.
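As a rough illustration (this is not the agent's actual C# code), a file document along these lines could be assembled like this in JavaScript on Node.js. Only `_t` and `Size` are field names that appear in the Map function later in this post; every other field name, and the `buildFileDocument` helper itself, is a hypothetical choice made for the sketch.

```javascript
const crypto = require("crypto");

// Compute the MD5 hash of a file's contents as a lowercase hex string.
function md5Hex(buffer) {
  return crypto.createHash("md5").update(buffer).digest("hex");
}

// Build a catalog document for one file.
// Assumption: all field names except `_t` and `Size` are invented for this sketch.
function buildFileDocument({ name, parentId, volumeId, machine, contents, dates }) {
  return {
    _t: "FileInformation",    // discriminator used to tell files from directories and volumes
    Name: name,
    Size: contents.length,    // size in bytes
    MD5: md5Hex(contents),
    Created: dates.created,
    Updated: dates.updated,
    Accessed: dates.accessed,
    ParentId: parentId,       // reference to the parent directory document
    VolumeId: volumeId,       // stays the same when the drive moves between PCs
    CurrentMachine: machine,  // the PC the volume is attached to right now
  };
}

const doc = buildFileDocument({
  name: "hello.txt",
  parentId: "dir-1",
  volumeId: "vol-1",
  machine: "atom-server",
  contents: Buffer.from("hello"),
  dates: { created: new Date(0), updated: new Date(0), accessed: new Date(0) },
});
console.log(doc.MD5, doc.Size);
```

Keeping the volume identifier separate from the machine name is what would let a drive move between PCs without its files looking like new ones.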

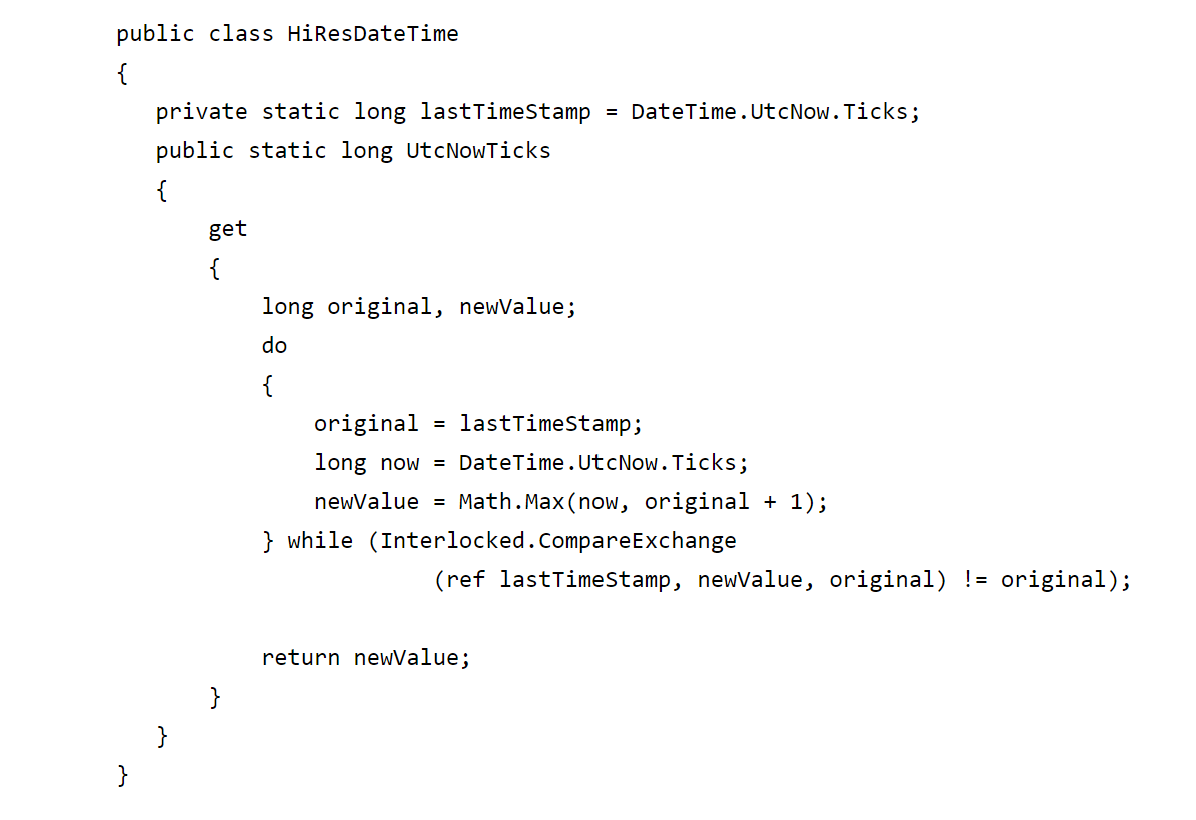

I implemented a re-startable tree scan that uses a couple of DateTime stamps to be able to determine which directories need to be scanned during the current pass and which ones have already been scanned. Any agent can be killed at any time and restarted and it will carry on walking the directory tree right where it left off. It will even continue correctly in the case where you move a volume from one PC to another.
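One way such a restartable scan could work is sketched below in JavaScript. It is a guess at the general idea rather than the agent's real logic: it assumes each directory record carries two stamps, `listedAt` (when its own entries were cataloged) and `completedAt` (when its whole subtree was finished), and compares both with the start time of the current pass. The `store`, `listDirectory` and `clock` parameters are hypothetical stand-ins for the MongoDB collection, the file system and the system clock.

```javascript
// Scan `path` and everything below it, skipping work already done in this pass.
function scanDirectory(store, path, passStarted, listDirectory, clock) {
  let record = store.get(path);
  if (!record) {
    record = { path, children: [], listedAt: 0, completedAt: 0 };
    store.set(path, record);
  }
  // The whole subtree was finished during this pass: nothing to do.
  if (record.completedAt >= passStarted) return;

  // Only re-list the directory if it has not been listed during this pass.
  if (record.listedAt < passStarted) {
    record.children = listDirectory(path); // throws if the agent is "killed"
    record.listedAt = clock();
  }
  for (const child of record.children) {
    scanDirectory(store, child, passStarted, listDirectory, clock);
  }
  record.completedAt = clock();
}

// Demo with an in-memory tree and a lister that dies after two listings.
const tree = { "/": ["/a", "/b"], "/a": ["/a/x"], "/a/x": [], "/b": [] };
let ticks = 0;
const clock = () => ++ticks;   // monotonic fake clock
const store = new Map();
const passStarted = clock();   // in a real agent this would be persisted too

const listed = [];
let budget = 2;
const lister = (path) => {
  if (budget-- <= 0) throw new Error("agent killed");
  listed.push(path);
  return tree[path];
};

try {
  scanDirectory(store, "/", passStarted, lister, clock);
} catch (e) {
  // The first run stops part way through the tree.
}
budget = Infinity;             // restart: the second run is allowed to finish
scanDirectory(store, "/", passStarted, lister, clock);
console.log(listed);
```

In this sketch the second run walks back down from the root but only lists the directories the first run never reached, so no directory is listed twice within a pass.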

Each agent uses the Task Parallel Library's Parallel.ForEach to crawl each volume in parallel and to parse multiple files from each directory simultaneously.

By storing all of the file metadata in MongoDB it's easy to use Map-Reduce to calculate some interesting statistics for the files on the network.

For example, to create a summary of file sizes I can use a Map function:

    function Map() {
        // Only file documents with a non-zero Size are counted.
        if (this.Size && this._t == "FileInformation") {
            var size = this.Size;
            // Bucket by magnitude: "kb" = under 1 KB, "mb" = under 1 MB, and so on.
            if (size < 1024)
                emit("kb", {count: 1, size: size});
            else if (size < 1024 * 1024)
                emit("mb", {count: 1, size: size});
            else if (size < 1024 * 1024 * 1024)
                emit("gb", {count: 1, size: size});
            else if (size < 1024 * 1024 * 1024 * 1024)
                emit("tb", {count: 1, size: size});
            else
                emit("tb+", {count: 1, size: size});
        }
    }

and a reduce function:

    function Reduce(key, arr_values) {
        // Sum the file counts and byte totals emitted for this bucket.
        var count = 0;
        var size = 0;
        for (var i = 0; i < arr_values.length; i++) {
            count = count + arr_values[i].count;
            size = size + arr_values[i].size;
        }
        return {count: count, size: size};
    }

Map-Reduce operations like this take about 20 minutes to run (on the Atom server with just 4GB of RAM) whereas any query serviced by one of the indexes on the MongoDB collection is almost instantaneous.

I've been using the excellent MongoVue to run simple map-reduce scripts like this and to keep track of how quickly the database is growing.

Map-reduce can also be used to find duplicate files - by emitting the MD5 hash as the key and some information about the file as the value I can find every copy of every file across every computer on the home network.
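A duplicate finder along those lines might look like the sketch below. The `MD5` and `Path` field names, the `"DirectoryInformation"` type name and the `runMapReduce` helper are all assumptions for illustration; the helper is only a tiny local stand-in for the server so the two functions can be tried without a database.

```javascript
// Map: key each file by its MD5 hash (assumed field names: MD5, Path).
function mapDuplicates() {
  if (this._t === "FileInformation" && this.MD5) {
    emit(this.MD5, { count: 1, paths: [this.Path] });
  }
}

// Reduce: merge partial results. The result has the same shape as the emitted
// values because MongoDB may feed reduce output back into reduce.
function reduceDuplicates(key, values) {
  var result = { count: 0, paths: [] };
  for (var i = 0; i < values.length; i++) {
    result.count += values[i].count;
    result.paths = result.paths.concat(values[i].paths);
  }
  return result;
}

// Local stand-in for the server, for experimenting only.
function runMapReduce(docs, map, reduce) {
  const groups = new Map();
  globalThis.emit = (key, value) => {
    if (!groups.has(key)) groups.set(key, []);
    groups.get(key).push(value);
  };
  docs.forEach((doc) => map.call(doc));
  delete globalThis.emit;
  const out = {};
  for (const [key, values] of groups) {
    // Like MongoDB, skip reduce for keys that only have a single value.
    out[key] = values.length === 1 ? values[0] : reduce(key, values);
  }
  return out;
}

const docs = [
  { _t: "FileInformation", MD5: "aaa", Path: "D:/photos/IMG_0228.jpg" },
  { _t: "FileInformation", MD5: "aaa", Path: "E:/backup/IMG_0228.jpg" },
  { _t: "FileInformation", MD5: "bbb", Path: "D:/docs/budget.xls" },
  { _t: "DirectoryInformation", Path: "D:/photos" },
];
const results = runMapReduce(docs, mapDuplicates, reduceDuplicates);
const duplicates = Object.entries(results).filter(([, v]) => v.count > 1);
console.log(duplicates);
```

Filtering on `count > 1` afterwards leaves only the hashes that occur more than once, together with every path where that content lives.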

Since I have the file name and metadata for every file on the home network I can also easily find any file using MongoDB's regex matching feature against the path.
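For instance, a filter for such a search could be built as in the sketch below. `$regex` and `$options` are MongoDB's query operators; the `Path` field name and the `files` collection mentioned in the comment are placeholders, since the real names aren't given here.

```javascript
// Escape regex metacharacters so the fragment is matched literally.
function escapeRegex(text) {
  return text.replace(/[.*+?^${}()|[\]\\]/g, "\\$&");
}

// Build a query filter matching file documents whose path contains `fragment`,
// ignoring case. Assumption: the path is stored in a field called `Path`.
function pathContainsFilter(fragment) {
  return {
    _t: "FileInformation",
    Path: { $regex: escapeRegex(fragment), $options: "i" },
  };
}

const filter = pathContainsFilter("IMG_0228.jpg");
// In the mongo shell this could be passed to find(), for example
// db.files.find(filter), where `files` is a placeholder collection name.
console.log(JSON.stringify(filter));
```

An unanchored, case-insensitive pattern like this generally can't make efficient use of an index, so a search of this kind is likely to scan the whole collection.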

The Hard Parts

For starters you'll need a library that can handle long file names (paths longer than the 260-character MAX_PATH limit). Then you'll need to fix it to provide at least the functionality that FileInfo and DirectoryInfo give you in .NET.

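Next you'll need to learn about reparse points and hard links, and you'll need to skip over them because with them in place the file system is not a tree. Reparse points such as junctions and symbolic links can turn it into a cyclic graph, and hard links make the same file show up under more than one path, so a simple crawler will quickly get confused or stuck.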

You'll also want to store the NTFS file Id and the unique Volume ID for every file so you can track it when the file is moved or the removable drive is connected to a different computer.
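The idea can be sketched as an upsert keyed on the pair of identifiers rather than on the path. Everything in the block below (the `identityKey` and `upsertFile` helpers, the field names and the in-memory `catalog` map standing in for the collection) is hypothetical.

```javascript
// Identity that survives renames and the drive moving between PCs.
function identityKey(volumeId, fileId) {
  return `${volumeId}:${fileId}`;
}

// Record an observation of a file; update the existing entry if one exists.
function upsertFile(catalog, observation) {
  const key = identityKey(observation.volumeId, observation.fileId);
  const existing = catalog.get(key);
  if (existing) {
    existing.path = observation.path;       // the file was moved or renamed
    existing.machine = observation.machine; // the drive was plugged in elsewhere
    return "updated";
  }
  catalog.set(key, { ...observation });
  return "inserted";
}

const catalog = new Map();
const first = upsertFile(catalog, {
  volumeId: "vol-1", fileId: "42", path: "E:/photos/a.jpg", machine: "laptop",
});
const second = upsertFile(catalog, {
  volumeId: "vol-1", fileId: "42", path: "F:/archive/a.jpg", machine: "atom-server",
});
console.log(first, second, catalog.size);
```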

So how well does it work?

This all seems to work really well. Nearly every volume has now been cataloged: about 5M files occupying over 6TB of space. The worst offender is a single file with 100+ copies. I've used the find feature in MongoDB to locate a file I was missing, and I'm now better able to plan how to arrange directories and file generations across the various hard drives I have.

What's next

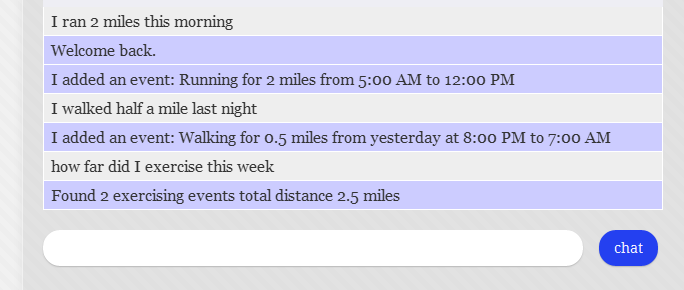

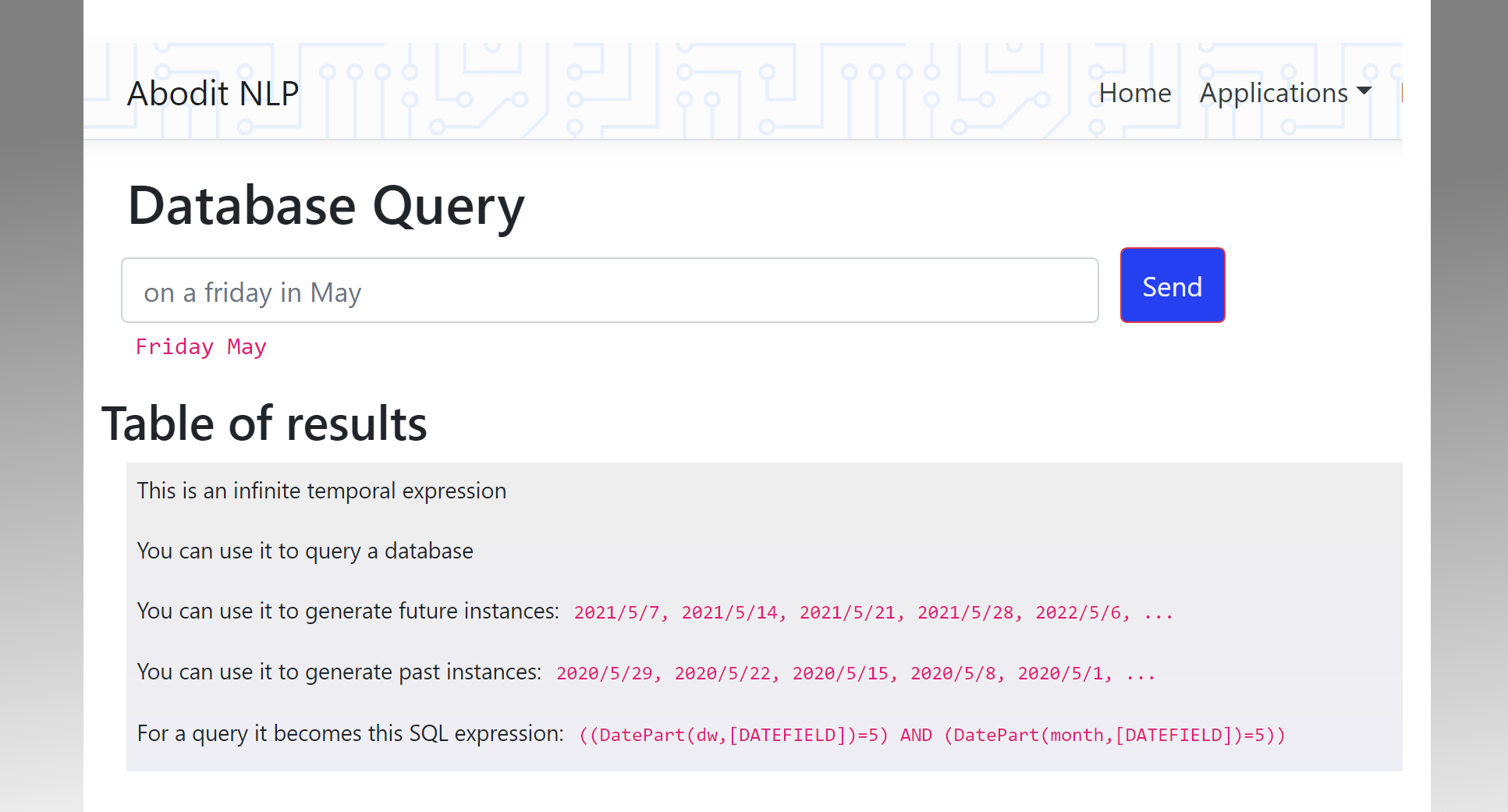

Well, of course this needs to be connected to the home automation system and my Natural Language engine so you can ask "send a copy of IMG_0228 from last week to X" or "where are all the spreadsheets I created last year?" That will be fairly easy.

After that I hope to incorporate backup features into the agents too, so they can automatically keep the required number of copies of each file according to its importance. I'd also like to set up a rotating set of external drives that live in the fire safe when not connected and get updated with the latest copies of all the important files whenever they are plugged in.

I'd also like to be able to get the agents to move whole groups of directories around between drives, as juggling the directory layout each time a new hard drive is added to the system is always a time-consuming process.

Comments or Questions?

Does everyone else have a hard time managing multiple computers, hard drives, directories and multiple copies of files? What tools do you use to do this? Is there anything commercially available that I could have used instead? Would a tool like this be useful to you? Should I publish the code somewhere? Comments and questions are always welcome here or on Twitter.