10 reasons my O(n²) algorithm is better than your O(n) algorithm

OK, so it's a controversial title, but the point I want to make is that programmers often get confused about the time complexity, i.e. order O() of an algorithm and performance. They will happily declare that their O(n) algorithm is so much better than someone else's O(n²) algorithm as they rip out some simple, stable, working code and replace it with their vastly more complex, un-tested, but 'optimized' code, often without even measuring to see if there is in fact any performance gain. The fact is that O() notation refers to the asymptotic performance of an algorithm and that in a typical environment with typical data you aren't going to be anywhere close to asymptotic behavior. Performance is, in fact, influenced by many other factors besides the time complexity of an algorithm.

So what factors can make an O(n²) algorithm better than an O(n) algorithm?

1. It might take a very large N before the O(n²) algorithm is slower than the O(n) algorithm. And we aren't talking n=10, n=100, ... here, but perhaps millions or billions before the cross-over in performance is achieved.

2. O() refers to time-complexity not to how long the algorithm actually takes to execute on a particular CPU. For that you need to look at the mix of instructions used and how long each of those instructions takes. But that in itself is a gross-simplification as we shall see next ...

Even if the two algorithms have a very similar number of operations using similar CPU instructions, they still are not going to run at the same speed:

3. The compiler might be able to apply optimizations to one algorithm that it cannot apply to the other, dramatically boosting performance.

4. The CPU itself might be able to optimize the instruction execution sequence through pipelining or other techniques to dramatically boost performance. A dumb scan of an array for example can be accomplished really quickly by most CPUs because they have been optimized to handle such frequent, repetitive tasks.

5. A simpler O(n²) algorithm might have tighter loops in it than the more-complex O(n) algorithm allowing it to run entirely in on-chip memory, dramatically boosting performance.

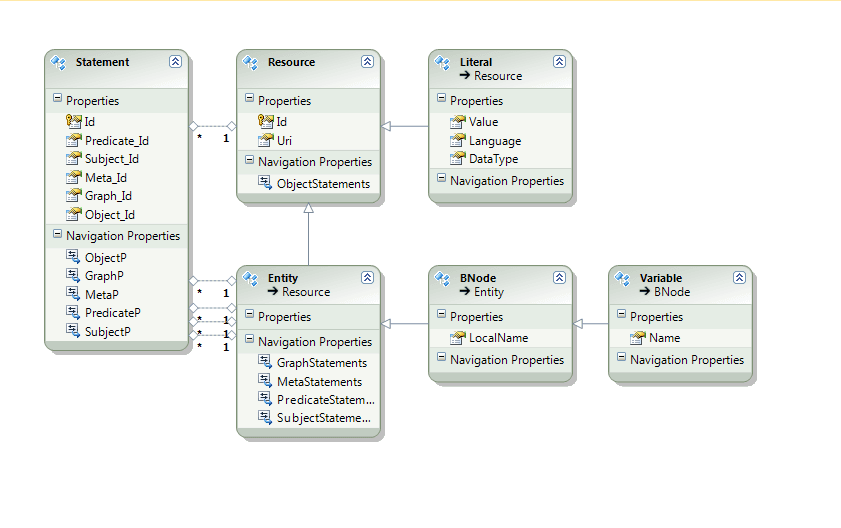

Even if two algorithms have similar operations and similar raw CPU performance they may have different space requirements. The O(n) algorithm might require 10x as much data space to run or maybe a different order of space requirement to the other, e.g. O(n²) space requirement vs O(n) space requirement.

6. The algorithm that requires least data space in the inner loop may find that its data is being held in registers or on-chip RAM during execution and thus receive a significant performance boost.

7. The more space hungry algorithm might have its data paged to disk during operation incurring a huge additional time penalty.

Some algorithms are inherently more parallelizable than others:

8. As we move to a world of multi-core processors it's going to be more important to be able to parallelize code to get performance gains of 4x, 8x, ... PLINQ and other parallel extensions in modern languages can easily be applied to 'dumb' nested for-loops to gain significant improvements in performance. Applying those same speedups to your 'optimized' tree-based code might be much harder or even impossible.

'Better' is a very subjective word, better performance is just one of many issues

9. The better algorithm is probably the one that's easiest to write, easiest for others to read, easiest to test and easiest to maintain. A simple nested for-loop may be 1000x better than some O(n) algorithm when best is defined like this.

10. Even if your algorithm beats mine with all of these factors taken into account that's no guarantee it will do so in the future. Compilers evolve, CPUs get smarter and much of that effort is devoted to making dumb code faster.

Conclusion

Premature optimization is always a bad idea.

Many 'optimizations' can actually lead to worse not better performance.

Always measure, then optimize, then measure again.

It's often cheaper to buy another server than it is to have someone 'optimize' your code.

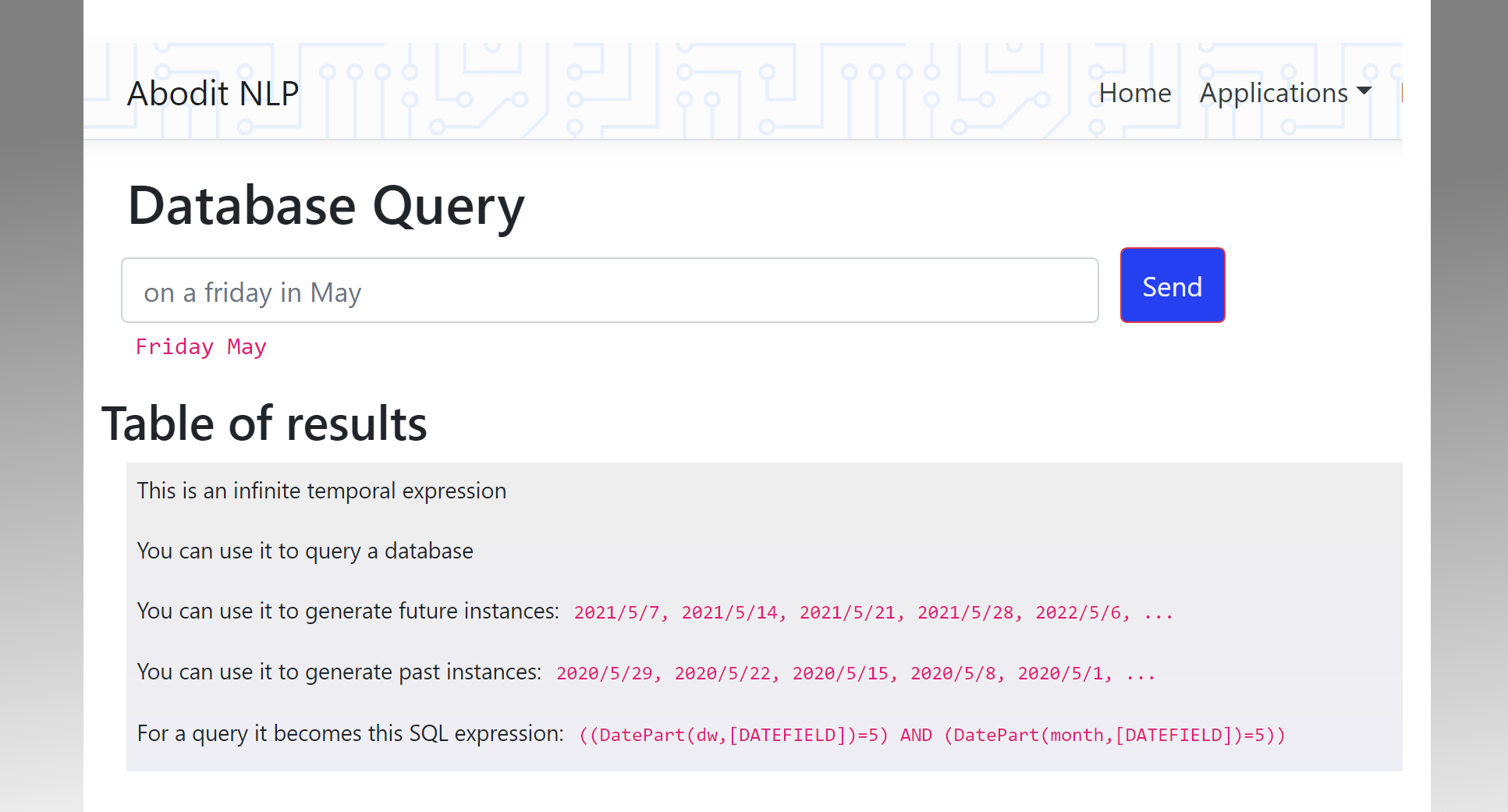

O(n) is a much misunderstood topic

On modern servers space complexity is nearly always more important than time complexity these days. If you can run in on-chip RAM you have a huge advantage.